AI & Data Governance: Power with Responsibility - AI Security Risk Assessment - ISO 42001 AI Governance

In today's digital economy, data is the foundation of innovation, and AI is the engine driving transformation. But without proper data governance, both can become liabilities. Security risks, ethical pitfalls, and regulatory violations can threaten your growth and reputation. Developers must implement strict controls over what data is collected, stored, and processed, often requiring a Data Protection Impact Assessment (DPIA).

With AIMS (Artificial Intelligence Management System) & Data Governance, you can unlock the true potential of data and AI, steering your organization towards success while navigating the complexities of power with responsibility.

Trust Is Built Through Governance

AI and Data Governance isn’t just about compliance; it’s about trust. When your systems are well-governed, customers feel safer, stakeholders gain confidence, and your business operates with clarity and integrity. Governance is what turns data and AI into a reliable engine for sustainable success.

Embrace AI and Data Governance, where trust is the currency and governance is the key that unlocks sustainable success built on integrity and transparency.

Why Data Governance Matters

Your data is a strategic asset. Without clear rules and oversight, it can lead to costly mistakes and non-compliance. Strong Data Governance ensures accuracy, privacy, and secure access across your organization, minimizing risk and enabling smarter decisions at every level.

Unleash the true potential of your data assets with robust Data Governance, where accuracy, privacy, and security pave the way for intelligent decision-making and lower-risk growth.

Our Approach to Data Governance

At DISC InfoSec Group, we help businesses build robust Data Governance frameworks. From defining policies for data collection and classification to ensuring compliance with ISO 27001, ISO 27701, ISO 42001, GDPR, HIPAA, and CCPA, we work with your teams to safeguard your data and your future.

Trust DISC InfoSec Group to be your guardian of data governance, where industry-leading expertise, unwavering compliance, and future-proof frameworks converge to secure your digital assets and pave the way for boundless growth.

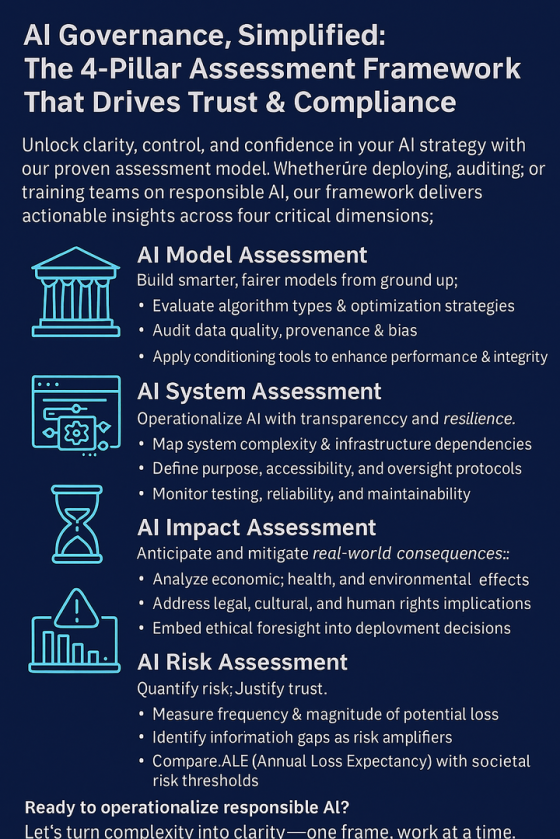

Managing AI Responsibly

AI is changing how organizations operate, but it also introduces new risks. Bias, opacity, and misuse can all undermine its value. AI Governance ensures your AI systems are fair, explainable, and aligned with your core values and legal responsibilities.

Embrace the power of AI with confidence by implementing robust AI Governance, where fairness, transparency, and ethical alignment converge to unleash innovation without compromising integrity or exposing your organization to risks.

DISC LLC Solutions for AI Governance

Our services include ethical AI guidelines, bias monitoring, performance audits, and more, ensuring your AI remains a force for good rather than a source of risk. DISC InfoSec provides specialized virtual Chief AI Officer (vCAIO) services and information security consulting to organizations navigating the evolving landscape of AI governance, cybersecurity frameworks, and regulatory compliance. We bridge the gap between traditional compliance frameworks and emerging AI governance requirements.

Unlock the transformative potential of AI while navigating its complexities with DISC LLC's solutions for AI Governance: your trusted partner in harnessing the power of artificial intelligence responsibly, ethically, and without compromise.

Ready to Build a Smarter, Safer Future?

When Data Governance and AI Governance work together, your business becomes more agile, compliant, and trusted. DISC InfoSec Group is here to help you lead with confidence.

Schedule a consultation today — and let’s build the future on a foundation of trust.

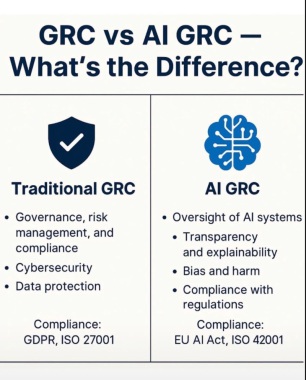

The Strategic Synergy: ISO 27001 and ISO 42001 – A New Era in Governance

ISO/IEC 27001 and ISO/IEC 42001 both address risk through management systems, but with different focuses. ISO/IEC 27001 is centered on information security: protecting the confidentiality, integrity, and availability of data. ISO/IEC 42001 is the first standard designed specifically for managing artificial intelligence systems responsibly. It includes considerations such as AI-specific risks, ethical concerns, transparency, and human oversight, which are not fully addressed in ISO/IEC 27001. Organizations working with AI should therefore not rely solely on traditional information security controls.

While ISO/IEC 27001 remains critical for securing data, ISO/IEC 42001 complements it by addressing broader governance and accountability issues unique to AI. The article suggests that companies developing or deploying AI should integrate both standards to build trust and meet growing stakeholder and regulatory expectations. Applying ISO 42001 can help demonstrate responsible AI practices, ensure explainability, and mitigate unintended consequences, positioning organizations to lead in a more regulated AI landscape.

We've integrated AWS Bedrock, OCR systems, and AI chat into a regulated environment, so we know what actually works. One expert across AI governance, InfoSec, and regulatory compliance: no coordination overhead, no conflicting advice.

Want to learn more about managing AI responsibly? Visit the DISC InfoSec blog for expert posts on AI compliance and governance.

AIMS and Data Governance

Responsibility and disruption must coexist. Implementing BS ISO/IEC 42001 (Information technology. Artificial intelligence. Management system) helps demonstrate that you're developing AI responsibly.

You can’t have AI without an IA

This is a clever way to emphasize that Information Architecture (IA) is foundational to effective Artificial Intelligence (AI). AI systems rely on well-organized, high-quality, and accessible data to function properly. Without strong IA (clear data structures, taxonomies, metadata, and governance), AI can produce biased, unreliable, or even harmful results. In short: AI is only as good as the information it learns from, and that's where IA comes in.

Specific users of BS ISO/IEC 42001 will likely be:

- AI consultants
- AI policy staff
- Senior staff looking to introduce or adapt AI in their business
- AI solution and service providers, spanning machine learning, natural language processing (NLP), and computer vision
- AI researchers
- AI standards developers
- Users of management system standards
- C-level management

What does BS ISO/IEC 42001 - Artificial intelligence management system cover?

BS ISO/IEC 42001:2023 specifies requirements and provides guidance for establishing, implementing, maintaining and continually improving an AI management system within the context of an organization.

The AI Readiness Gap: High Usage, Low Security

The Databricks AI Security Framework (DASF) and the Cloud Security Alliance (CSA) AI Controls Matrix (AICM) can both be used effectively for AI security readiness assessments.

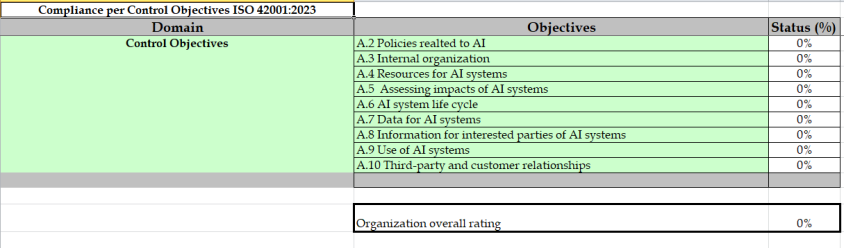

AI Governance Readiness Assessment - AI Security Risk Assessment (EU AI Act)

Is Your Business Ready for the Risks of AI?

A 10-day fixed-fee service to assess your AI compliance, risk posture, and governance readiness.

Best Practices:

- Conduct purpose assessments before data collection
- Use synthetic or anonymized data where possible
- Implement access controls and retention limits
- Regularly audit data pipelines for compliance
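The retention and anonymization practices above can be sketched in Python. This is a minimal illustration, not a prescribed implementation: the `RETENTION_LIMITS` table, the `Record` type, and the helper names are hypothetical, and the retention periods are placeholder values. Note that a keyed hash (HMAC) replaces a direct identifier with a stable pseudonym; under GDPR this is pseudonymization, not anonymization, because whoever holds the key can recompute pseudonyms for known identifiers and re-link the data.

```python
import hashlib
import hmac
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List

# Hypothetical retention policy: maximum age per data category (placeholder values).
RETENTION_LIMITS = {
    "support_chat": timedelta(days=90),
    "training_sample": timedelta(days=365),
}


@dataclass
class Record:
    user_id: str
    category: str
    collected_at: datetime
    text: str


def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed SHA-256 hash (pseudonymization, not anonymization)."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def within_retention(record: Record, now: datetime) -> bool:
    """True if the record is within its category's retention limit.
    Categories without a defined limit fail closed and are treated as expired."""
    limit = RETENTION_LIMITS.get(record.category)
    if limit is None:
        return False
    return now - record.collected_at <= limit


def prepare_for_pipeline(records: List[Record], key: bytes, now: datetime) -> List[Record]:
    """Drop records past retention, then pseudonymize identifiers before downstream use."""
    return [
        Record(pseudonymize(r.user_id, key), r.category, r.collected_at, r.text)
        for r in records
        if within_retention(r, now)
    ]
```

In practice the key would be kept in a secrets manager, and expired records would also have to be deleted from storage, not just filtered out of one pipeline.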

Stay ahead of the curve. For practical insights, proven strategies, and tools to strengthen your AI governance and continuous improvement efforts, check out our latest blog posts on AI, AI Governance, and AI Governance tools.

You don’t always need to hire a full-time CAIO; what you really need is AI Governance Leadership. In my latest post, I explain how businesses can build robust AI oversight without expanding headcount. If you care about responsible AI deployment, compliance, and strategic governance, this one’s for you.

AI systems should be developed using data sets that meet certain quality standards

Data Governance

AI systems, especially high-risk ones, must rely on well-managed data throughout training, validation, and testing. This involves designing systems thoughtfully, knowing the source and purpose of collected data (especially personal data), properly processing data through labeling and cleaning, and verifying assumptions about what the data represents. It also requires ensuring there is enough high-quality data available, addressing harmful biases, and fixing any data issues that could hinder compliance with legal or ethical standards.
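One way to make these requirements auditable is to keep a structured provenance record for each data set and check it for gaps. The sketch below assumes a hypothetical `DatasetRecord` schema; the field names, including the GDPR-style `lawful_basis` field, are illustrative choices rather than anything mandated by a specific standard.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DatasetRecord:
    """Hypothetical provenance record for one training, validation, or test data set."""
    name: str
    source: str = ""                    # where the data came from
    purpose: str = ""                   # why it was collected
    contains_personal_data: bool = False
    lawful_basis: str = ""              # assumption: required only when personal data is present
    preparation_steps: List[str] = field(default_factory=list)  # e.g. labeling, cleaning
    assumptions: List[str] = field(default_factory=list)        # what the data is assumed to represent
    known_biases: List[str] = field(default_factory=list)
    bias_mitigations: List[str] = field(default_factory=list)


def governance_gaps(rec: DatasetRecord) -> List[str]:
    """Return human-readable gaps in how this data set is documented."""
    gaps = []
    if not rec.source:
        gaps.append("source not documented")
    if not rec.purpose:
        gaps.append("purpose not documented")
    if rec.contains_personal_data and not rec.lawful_basis:
        gaps.append("personal data without documented lawful basis")
    if not rec.preparation_steps:
        gaps.append("no labeling/cleaning steps recorded")
    if not rec.assumptions:
        gaps.append("assumptions about what the data represents not stated")
    if rec.known_biases and not rec.bias_mitigations:
        gaps.append("known biases without mitigation")
    return gaps
```

For example, a record with a source and purpose that contains personal data but has nothing else filled in reports three gaps: the missing lawful basis, the missing preparation steps, and the unstated assumptions.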

Quality of Data Sets

The data sets used must accurately reflect the intended purpose of the AI system. They should be reliable, representative of the target population, statistically sound, and complete to ensure that outputs are both valid and trustworthy.
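Completeness and representativeness can be checked with simple statistics before training. This is a minimal sketch in plain Python; the function names, the dictionary-per-row layout, and the 5% default tolerance are assumptions for illustration, and the target population shares have to come from a source you trust (for example, census or customer-base figures).

```python
from collections import Counter
from typing import Dict, List, Optional


def completeness(rows: List[Dict[str, Optional[object]]], fields: List[str]) -> Dict[str, float]:
    """Share of rows that have a non-missing value for each required field."""
    if not rows:
        raise ValueError("empty data set")
    return {f: sum(1 for r in rows if r.get(f) is not None) / len(rows) for f in fields}


def representation_gaps(
    rows: List[Dict[str, Optional[object]]],
    group_field: str,
    target_shares: Dict[str, float],
    tolerance: float = 0.05,
) -> Dict[str, float]:
    """Compare each group's share of the data set with its share of the target population.
    Returns {group: observed - expected} for groups whose gap exceeds `tolerance`."""
    if not rows:
        raise ValueError("empty data set")
    counts = Counter(r.get(group_field) for r in rows)
    gaps = {}
    for group, expected in target_shares.items():
        observed = counts.get(group, 0) / len(rows)
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 3)
    return gaps
```

A positive gap means the group is over-represented relative to the target population; a negative gap means it is under-represented.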

Consideration of Context

AI developers must ensure data reflects the real-world environment where the system will be deployed. Context-specific features or variations should be factored in to avoid mismatches between test conditions and real-world performance.
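A common way to detect a mismatch between test conditions and the deployment environment is to compare feature distributions, for example with the Population Stability Index (PSI). The sketch below uses PSI as one illustrative drift metric, not something the text prescribes; the function names and the `eps` floor are assumptions. A frequently cited rule of thumb treats a PSI above about 0.25 as a significant shift, but thresholds should be set per use case.

```python
import math
from collections import Counter
from typing import Dict, Hashable, Iterable, List


def _shares(values: List[Hashable], categories: Iterable[Hashable], eps: float = 1e-6) -> Dict[Hashable, float]:
    """Share of each category in `values`, floored at `eps` so the log term stays finite."""
    counts = Counter(values)
    return {c: max(counts.get(c, 0) / len(values), eps) for c in categories}


def population_stability_index(test_values: List[Hashable], deployed_values: List[Hashable]) -> float:
    """PSI = sum over categories of (deployed - test) * ln(deployed / test)."""
    categories = set(test_values) | set(deployed_values)
    expected = _shares(test_values, categories)
    actual = _shares(deployed_values, categories)
    return sum((actual[c] - expected[c]) * math.log(actual[c] / expected[c]) for c in categories)
```

For instance, if a vision system was tested on 80% daylight and 20% night images but sees 40% daylight and 60% night in deployment, the PSI for that lighting feature is roughly 0.72, a strong signal that test results may not carry over.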

Special Data Handling

In rare cases, sensitive personal data may be used to identify and mitigate biases, but only when no alternative exists. When it is used, strict security and privacy safeguards must be applied, including controlled access, thorough documentation, a prohibition on sharing, and mandatory deletion once the data is no longer needed. The justification for such use must always be recorded.
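The safeguards above can be expressed as a small access-controlled wrapper. This is a hypothetical sketch: the class name, the in-memory allowlist, and the log format are assumptions, and a real deployment would rely on your platform's identity, encryption, and deletion mechanisms rather than a Python object. Python code alone cannot stop an authorized user from copying data; the absence of an export method only mirrors the prohibition on sharing at the design level.

```python
from datetime import datetime, timezone
from typing import Dict, List, Optional, Set, Tuple


class SensitiveBiasDataStore:
    """Hypothetical holder for sensitive data used only to detect and mitigate bias:
    recorded justification, restricted access, an access log, no export method, explicit deletion."""

    def __init__(self, justification: str, alternatives_considered: str, authorized_users: Set[str]):
        # Refuse to create the store unless the justification is recorded up front.
        if not justification.strip() or not alternatives_considered.strip():
            raise ValueError("justification and alternatives considered must be recorded")
        self.justification = justification
        self.alternatives_considered = alternatives_considered
        self._authorized = set(authorized_users)
        self._records: Optional[List[Dict]] = None
        self.access_log: List[Tuple[str, str, str]] = []

    def _check(self, user: str, action: str) -> None:
        # Log every attempt, including denied ones, then enforce the allowlist.
        allowed = user in self._authorized
        self.access_log.append(
            (datetime.now(timezone.utc).isoformat(), user, action if allowed else "DENIED " + action)
        )
        if not allowed:
            raise PermissionError(f"{user} is not authorized to {action}")

    def load(self, user: str, records: List[Dict]) -> None:
        self._check(user, "load")
        self._records = [dict(r) for r in records]

    def read_for_bias_analysis(self, user: str) -> List[Dict]:
        self._check(user, "read")
        if self._records is None:
            raise RuntimeError("no data loaded, or data already deleted")
        return [dict(r) for r in self._records]

    def delete(self, user: str) -> None:
        # Drops the in-memory copy only; real deletion must also cover storage and backups.
        self._check(user, "delete")
        self._records = None
```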

Non-Training AI Systems

For AI systems that do not rely on training data, the requirements concerning data quality and handling mainly apply to testing data. This ensures that even rule-based or symbolic AI models are evaluated using appropriate and reliable test sets.
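For a rule-based system there is nothing to train, so the quality effort shifts to the test set. The sketch below is hypothetical: the fraud-style rule, the placeholder `XX` country code, and the function names are illustrative. It evaluates the rule against a labeled test set that deliberately includes a boundary value.

```python
from typing import Callable, List, Tuple

# Each labeled case: ((amount, country), expected flag).
LabeledCase = Tuple[Tuple[float, str], bool]


def rule_based_flag(amount: float, country: str) -> bool:
    """Hypothetical rule: flag transfers above 10,000 or to a placeholder watch-listed country code."""
    return amount > 10_000 or country in {"XX"}


def evaluate(system: Callable[[float, str], bool], cases: List[LabeledCase]) -> Tuple[float, List[LabeledCase]]:
    """Run every labeled case through the system and return (accuracy, failing cases)."""
    if not cases:
        raise ValueError("test set is empty")
    failures = [(inputs, expected) for inputs, expected in cases if system(*inputs) != expected]
    return 1 - len(failures) / len(cases), failures
```

If the policy says transfers of exactly 10,000 must be flagged, a test case at that boundary fails because the rule uses `>` rather than `>=`. This is the kind of defect a reliable test set exists to catch, even when no model was trained.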

Organizations building or deploying AI should treat data management as a cornerstone of trustworthy AI. Strong governance frameworks, bias monitoring, and contextual awareness help ensure that systems are fair, reliable, and compliant. For most companies, aligning with standards like ISO/IEC 42001 (AI management) and ISO/IEC 27001 (security) can help establish structured practices. My recommendation: develop a data governance playbook early, incorporate bias detection and context validation into the AI lifecycle, and document every decision for accountability. This not only supports regulatory compliance but also builds user trust.
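The advice to document every decision can be made concrete with a simple decision log. This sketch assumes a hypothetical in-memory `DecisionLog` that chains SHA-256 hashes, so later edits to recorded entries become detectable; the field names are illustrative, and a production log would be persisted to write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List, Optional


def _digest(body: Dict[str, str]) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class DecisionLog:
    """Append-only log of governance decisions; each entry carries the previous entry's hash."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self.entries: List[Dict[str, str]] = []

    def record(self, decision: str, rationale: str, owner: str, timestamp: Optional[str] = None) -> Dict[str, str]:
        body = {
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "decision": decision,
            "rationale": rationale,
            "owner": owner,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; editing, removing, or reordering a recorded entry breaks it."""
        prev = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True
```

One limitation of this design: dropping the newest entries leaves a valid shorter chain, so the latest hash should also be stored somewhere independent of the log.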

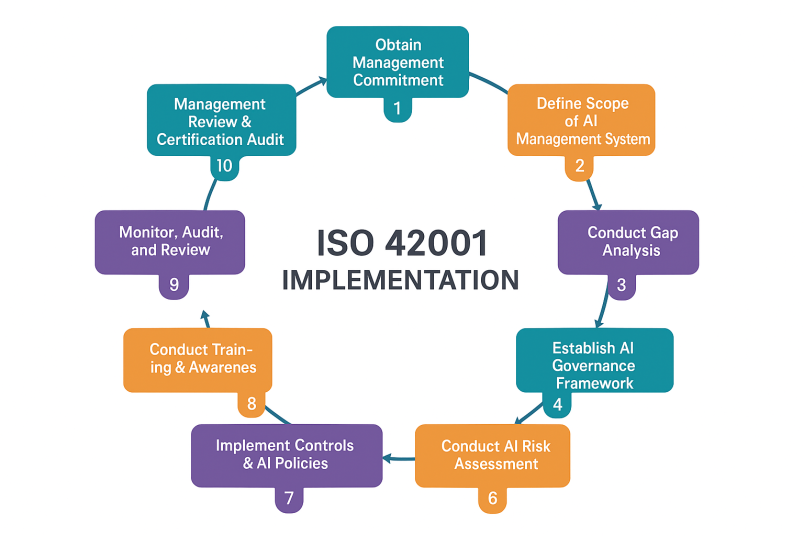

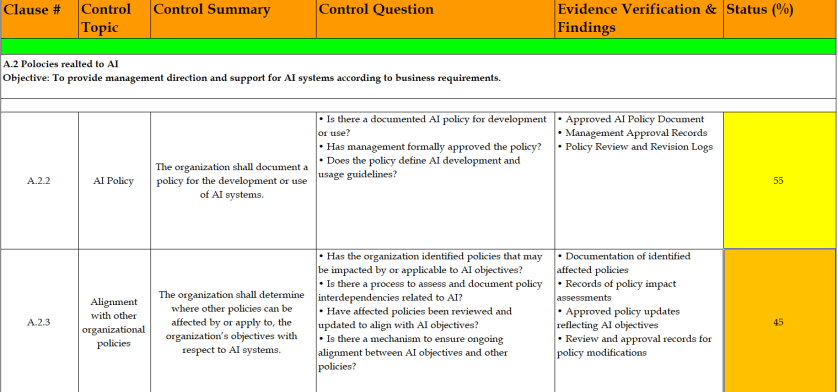

ISO 42001:2023 Control Gap Assessment

Unlock the competitive edge with our ISO 42001:2023 Control Gap Assessment, the fastest way to measure your organization’s readiness for responsible AI. This assessment identifies gaps between your current practices and the world’s first international AI governance standard, giving you a clear roadmap to compliance, risk reduction, and ethical AI adoption.

By uncovering hidden risks such as bias, lack of transparency, or weak oversight, our gap assessment helps you strengthen trust, meet regulatory expectations, and accelerate safe AI deployment. The outcome: a tailored action plan that not only protects your business from costly mistakes but also positions you as a leader in responsible innovation. With DISC InfoSec Group, you don’t just check a box; you gain a strategic advantage built on integrity, compliance, and future-proof AI governance.

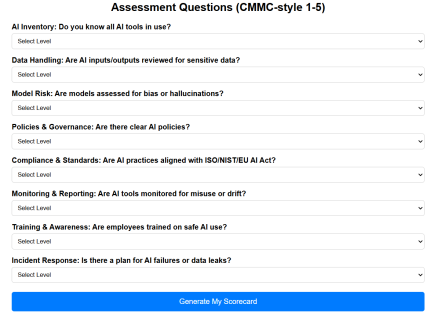

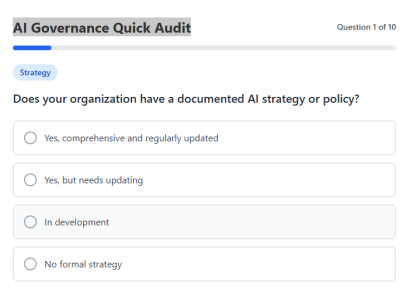

10 comprehensive AI governance questions

Use the link below to open an AI Governance Quick Audit in your browser, or click the image on the left side.
